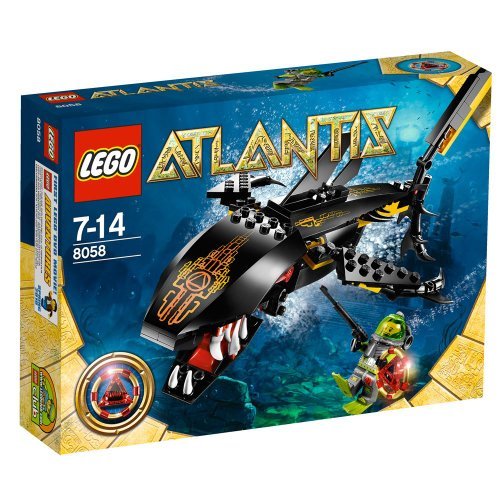

Fukushima Galilei Upright Freezer GRD-062FM, Unused, 4-Month Warranty, 2023 Model, Single-Phase 100V, W610 x D800, for Commercial Kitchens [Mugendo Osaka Store]

¥66,800 (tax included), shipping included

Item Description


| Condition | |
|---|---|
| AA | Display, unused, or outlet item. Naturally in excellent condition, but please accept minor scratches from display or storage. |

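※Condition ratings partly reflect our staff's subjective judgment, so please treat them as a rough guide only. Most items we handle are used goods, so we ask particularly meticulous buyers to refrain from purchasing.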

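| Warranty | |
|---|---|
| Warranty period | 4 months from delivery |
| About the warranty | If the repair cost would exceed the price of the item, we may offer a return and refund instead. Thank you for your understanding. |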

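| Specifications | |
|---|---|
| Manufacturer | Fukushima Galilei |
| Model | GRD-062FM |
| Power supply | Single-phase 100V |
| Frequency | 50/60 Hz |
| Power consumption | Cooling: 165/165 W; defrost: 257/257 W (50/60 Hz) |
| Temperature range | Freezer: -20°C or below |
| Capacity | Freezer: 503 L |
| External dimensions | W610 x D800 x H1950 mm |
| Weight | 85 kg |
| Notes | Produces drain water; a drain connection is required. |
| Details | Inverter control |
| Accessories | 4 wire shelves |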

| Other Notes | |
|---|---|
| Condition rank | AA (unused item) |
| Warranty period | 4 months from delivery |
| Stock location | Osaka store |

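| About Shipping |

In some areas of each prefecture, delivery may not be possible, or the item may have to be held for pickup at the carrier's local depot.

We accept cancellations for this reason; please contact us before the item ships.

Please contact us for details.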

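Items are shipped by our designated truck service.

A company, sole proprietorship, or store name (company name, trade name, store name, etc.) is required.

For parcel-size (takkyubin) items, no company, trade, or store name is required.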

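※If you post a question on Yahoo! Auctions or similar sites, any address details or phone number you include will be visible to the public, so please keep them brief or contact us by phone instead.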

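※Important※

・Delivery times cannot be specified with our designated truck service.

・Shipping is charged per item. All prices include tax.

・For business customers, we deliver to the address you specify (office, factory, etc.).

・For individual customers, we cannot deliver to your home; however, parcel-size items can be delivered to your front door.

Other items are, as a rule, shipped for pickup at the nearest depot. Thank you for your understanding.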

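However, if any of the following applies, we cannot hand over the item at the specified location, even for business customers:

・We deliver using trucks of 4 tons or larger, so depending on road width and other local conditions, the truck may not be able to stop in front of the delivery address.

・Items are handed over on the truck bed (no carrying inside), and the driver will not help with unloading. Please arrange enough people or a forklift for unloading.

・We cannot accept responsibility for any accidents that occur during handover.

・Please be present at the time of delivery. If the item cannot be handed over because you are absent, additional delivery and storage fees will apply.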

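Delivery to private residences

Our designated truck service is intended for businesses.

Delivery to a private residence requires arranging a different truck service, which incurs a separate shipping fee that may be higher.

If you would like delivery to a private residence, we will look up the shipping fee individually, so please contact us first.

※Business customers must state their company name, trade name, or store name. Without a company or trade name, you will be treated as an individual customer.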

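Shipping to Hokkaido and Okinawa is listed as a flat "100," in the shipping fee table, but it is actually quoted separately. A staff member will contact you after the automatic order confirmation email.

Shipping to remote islands is also quoted separately.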

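In-store pickup is also available

※Please be sure to contact us by phone or email in advance

Benefit 1: No shipping fee, so you save!

The larger the item, the higher the shipping cost, so pickup is recommended. There are no handling fees either.

Benefit 2: Payment and handover happen in person, so it's safe and reliable!

No need to worry about damage caused by carrier mishaps, and you can come at a time that suits you during our business hours.

Benefit 3: Skip the hassle of payment and shipping!

Just contact us and come pick it up, with no tedious paperwork.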

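| Notes on Purchase |

Stock availability

Items listed here are also sold simultaneously at the Mugendo store, on Yahoo! Auctions, and on Yahoo! Shopping. If someone buys the item through another channel at the same time, the stock you want may no longer be available. For current stock details, please call us.

Item condition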

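For items with multiple units in stock, the photos show a unit in typical condition, so the item you receive may have scratches or stains in different places from those pictured. This also applies to items with only one unit currently in stock if two or more units were received in the past. Thank you for your understanding.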
